Integrating Data Management and Data Analytics with R and postgreSQL.

Those wanting to be successful in data analytics increasingly have to become well versed in managing their data. That means it is no longer sufficient to just learn R or an analytic platform. You also need to be competent with SQL or some similar database platform.

As I am a huge advocate of open source applications, I will be using postgreSQL although that certainly isn't the only SQL platform that R can work with. I've got R to work with MySQL, Oracle SQL, postgreSQL, and Microsoft Server SQL. I already have a post on how to connect R to these platforms, though I don't get into Microsoft SQL Server because it is a painful (not worth it) process to do this if you are running OSX. (https://www.lazybayesian.com/2019/05/connecting-r-to-sql-database-postgresql.html) You would end up using RODBC package or something similar to get it done but you end up needing to use homebrew to install other tools on your machine to even to get that to work, and it just keeps going. If you are running Windows, connecting to Microsoft SQL Server using RODBC is actually pretty straightforward.

Anyways, In this post I will

The next order of business is to load a legitimate database so you can practice on it and get a feel for using the postgres app along with using R to connect to the postgreSQL session. Like I said, I will be using the United States budget database which is made freely available here. I downloaded the subset data as the full DB file is ENORMOUS. Please note that there is a guide on this webpage that discusses various details and provides some helpful syntax, depending on what you are wanting to do with the data. Once that file is downloaded, proceed to unzip it. When it is unzipped you can use the "pg_restore" function to load that dump file as a database within the application.

The way I did this was to run the pg_restore function within the terminal window. Because OSX doesn't natively know "pg_restore" I had to provide a path to the application so I could call it without doing anything special. I used the following to load the DB.

"pruned_data_store_api_dump" is what I called the unzipped dump folder. That is, the folder that appeared after I unzipped the download. Because I don't have a particular owner or want to set up admin privileges or security options, I used the --no-owner option. Please read the guide provided on the USA budget website if you have further questions about why that was necessary or certain properties of the database.

It may take some time to restore the dump file so please allow multiple hours for this task to run. I ran it overnight. The task ended with some errors, but it hasn't mattered yet, so I don't know what, if anything, that affected. If pg_restore ran successfully, then you will see the new database appear when you open the postgres application. Open the postgres application and double click on the new database to open a terminal window to access it.

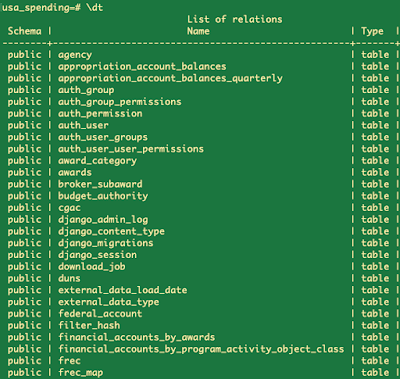

Once in, you can start exploring the database to see all the tables and get information about it.

Because I am not using an IDE, I don't access certain elements of information in a GUI, rather use some postgres commands to get a list of tables in the DB or information regarding a particular table, as demonstrated in the previous images. There is a psql tutorial website that provides good information on how to navigate postgres in this way.

You can write queries directly into the console at this point.

Feel free to explore postgreSQL and the USA spending database. Once you've had your fun, let's move on to getting R to connect to the database session. This way we can access all the data without using the postgres application, and we can seamlessly pull and analyze the information all in R. To get R to connect to the database, you can use the following.

library(RPostgreSQL)

con<-dbConnect(dbDriver("PostgreSQL"), dbname="usa_spending",host="localhost",port="5432")

Please be sure the 'RPostgreSQL' and 'DBI' library are installed. Also ensure that the application/database is running before attempting to connect. A simple oversight if you are running things locally.

In R, your queries can be saved as single string, which object you can pass to the dbGetQuery function, along with the connection information. When you are done, you can disconnect R from postgres with, dbDisconnect(con).

With that, you have all the tools you need to connect to a database and pull that data into R to analyze further. Now you see how R can be used along with a database platform to make R a more powerful tool to address broader data needs.

That is all for this post. If you there is anything you think I glossed over or anything you would like me to address, please let me know in the comments!

As I am a huge advocate of open source applications, I will be using postgreSQL although that certainly isn't the only SQL platform that R can work with. I've got R to work with MySQL, Oracle SQL, postgreSQL, and Microsoft Server SQL. I already have a post on how to connect R to these platforms, though I don't get into Microsoft SQL Server because it is a painful (not worth it) process to do this if you are running OSX. (https://www.lazybayesian.com/2019/05/connecting-r-to-sql-database-postgresql.html) You would end up using RODBC package or something similar to get it done but you end up needing to use homebrew to install other tools on your machine to even to get that to work, and it just keeps going. If you are running Windows, connecting to Microsoft SQL Server using RODBC is actually pretty straightforward.

Anyways, In this post I will

- Discuss acquiring postgreSQL application and provide a few resources for getting started and working in the application.

- Practice with a legitimate database being the USA spending database. I didn't want to work with a toy example, because I don't think that is helpful to people looking to get their feet wet.

- Connect R to the database and query data.

The next order of business is to load a legitimate database so you can practice on it and get a feel for using the postgres app along with using R to connect to the postgreSQL session. Like I said, I will be using the United States budget database which is made freely available here. I downloaded the subset data as the full DB file is ENORMOUS. Please note that there is a guide on this webpage that discusses various details and provides some helpful syntax, depending on what you are wanting to do with the data. Once that file is downloaded, proceed to unzip it. When it is unzipped you can use the "pg_restore" function to load that dump file as a database within the application.

The way I did this was to run the pg_restore function within the terminal window. Because OSX doesn't natively know "pg_restore" I had to provide a path to the application so I could call it without doing anything special. I used the following to load the DB.

/Applications/Postgres.app/Contents/Versions/10/bin/pg_restore --no-owner -d usa_spending /Users/lazybayesian/Downloads/pruned_data_store_api_dump

"pruned_data_store_api_dump" is what I called the unzipped dump folder. That is, the folder that appeared after I unzipped the download. Because I don't have a particular owner or want to set up admin privileges or security options, I used the --no-owner option. Please read the guide provided on the USA budget website if you have further questions about why that was necessary or certain properties of the database.

It may take some time to restore the dump file so please allow multiple hours for this task to run. I ran it overnight. The task ended with some errors, but it hasn't mattered yet, so I don't know what, if anything, that affected. If pg_restore ran successfully, then you will see the new database appear when you open the postgres application. Open the postgres application and double click on the new database to open a terminal window to access it.

Once in, you can start exploring the database to see all the tables and get information about it.

Because I am not using an IDE, I don't access certain elements of information in a GUI, rather use some postgres commands to get a list of tables in the DB or information regarding a particular table, as demonstrated in the previous images. There is a psql tutorial website that provides good information on how to navigate postgres in this way.

You can write queries directly into the console at this point.

Feel free to explore postgreSQL and the USA spending database. Once you've had your fun, let's move on to getting R to connect to the database session. This way we can access all the data without using the postgres application, and we can seamlessly pull and analyze the information all in R. To get R to connect to the database, you can use the following.

library(RPostgreSQL)

con<-dbConnect(dbDriver("PostgreSQL"), dbname="usa_spending",host="localhost",port="5432")

Please be sure the 'RPostgreSQL' and 'DBI' library are installed. Also ensure that the application/database is running before attempting to connect. A simple oversight if you are running things locally.

Please be aware that that host name and port provided in my code will only work if you installed the database locally on your machine. If it resides on a server, then you will need to get the information for the host and port. The admin will have this information. If your database requires a user login, then add user and password options to the dbConnect call. For example,

con<-dbConnect(dbDriver("PostgreSQL"), dbname="usa_spending",host="hostname",port="xxxx",user="myUserName",password="password")

See the R documentation with ?dbConnect for more details. Now you are ready to run queries! I did a quick example query using with the following.

In R, your queries can be saved as single string, which object you can pass to the dbGetQuery function, along with the connection information. When you are done, you can disconnect R from postgres with, dbDisconnect(con).

With that, you have all the tools you need to connect to a database and pull that data into R to analyze further. Now you see how R can be used along with a database platform to make R a more powerful tool to address broader data needs.

That is all for this post. If you there is anything you think I glossed over or anything you would like me to address, please let me know in the comments!

Comments

Post a Comment