Analyzing Text and Sentiment Analysis in R: Amazon Product Review Example

Data analysts don't always have the luxury of numerical data to analyze. Much of the time, data comes in the form of open text. Consumer product reviews and feedback, as well as comment threads from online merchants or CRM (customer relationship management, e.g. Salesforce) portals, can all be open text. Turning open text into usable information is no simple task.

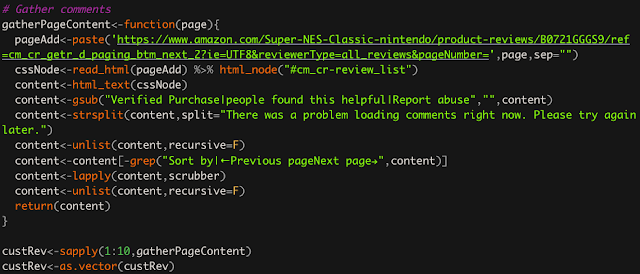

Word clouds are one way of approaching this task: they highlight the terms that appear most often. There are a number of word cloud libraries in R, my favorite being "wordcloud2". It outputs an HTML document that lets you hover over terms in the cloud to see their frequency. I mention this because word clouds are so common, but I won't be spending any more time on them in this post.

In this post I'll be discussing the following:

- A very brief discussion about extracting online data using 'rvest'.

- Basic options for cleaning text data.

- The polarity function from the qdap package.

While some kinds of sentiment analyses attempt to identify a variety of emotions in the data, most attempt to identify text that contains positive or negative expressions. Specifically, they measure how much positive or negative sentiment exists in the text, as well as how positive or negative that sentiment actually is. That is, "really good" versus "just okay".

The R package I'll be using here is the 'qdap' library. There have been infuriating installation issues with this package in the past because it depends on 'rJava', but that hasn't been an issue in recent versions. Anything having to do with proprietary software like Oracle's Java can often be an enormous pain, because these folks aren't terribly motivated to make anything functional with open-source programs. The problem is that qdap has a lot of good qualitative tools, so the battle may be worth it.

Acquiring Text Data: Amazon Product Review

First, be sure you have the 'rvest' and 'qdap' libraries installed in R. These contain all the essential functions for completing this analysis.

The data I will be using for this analysis come from the product reviews on Amazon for the Super NES Classic. This is a retro gaming console that Nintendo put out a few years ago. Nintendo has always struggled with business intelligence. They have a tendency to not fully think through the process of a new product release and are often way off the mark when it comes to demand, and the SNES Classic's release was no exception. On a personal note, it was Nintendo's severe production neglect and the lack of features on this console that drove me to build out a Raspberry Pi retro emulation station. I should probably thank them, because I have never regretted doing that... Anyways, the sentiment here ought to be all over the place.

R is very capable of scraping information off the web. There is already a plethora of blogs on this subject, so I won't waste time getting into it. I will, however, provide you with the code I used to acquire the data for the sake of full disclosure and replicability. I'll also talk you through some of it in case you are not familiar with web scraping in R.

I wrote a function that goes through the pages of customer reviews and pulls down the text content. I found the HTML nodes that contained the content I wanted by using SelectorGadget. Enter vignette("selectorgadget") into the console and it will take you through the process of acquiring and using it. It's an extension for Google Chrome, by the way.

There are over 2,000 comments, so I sorted them by "top rated" and took the first 100. Only 10 are displayed per page, so I had to go through ten different pages to get that many. This is why I wrote a function: I could iterate through the pages of comments using sapply rather than a for loop. That works because the URLs for the various pages differ only by the page number, which is why the function starts by building each URL with paste.
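The page-by-page approach described above can be sketched roughly as follows. This is a minimal illustration and not the exact code I ran: the base URL, the CSS selector, and the names scrape_page and page_urls are placeholders made up for this example, so swap in the review URL for your product and whatever selector SelectorGadget gives you.

```r
# Hypothetical base URL (an assumption): the real one is the review-listing
# URL for your product, ending where the page number goes.
base_url <- "https://www.example.com/product-reviews/PRODUCT_ID?pageNumber="

# Hypothetical CSS selector (an assumption): find the real one with SelectorGadget.
review_selector <- ".review-text"

scrape_page <- function(page_number) {
  # The page URLs differ only by the page number, so paste0() builds each one
  url <- paste0(base_url, page_number)
  page <- rvest::read_html(url)
  nodes <- rvest::html_nodes(page, review_selector)
  # Return the text content of every matching node on this page
  rvest::html_text(nodes)
}

# The ten URLs the function would visit (built here without any network access)
page_urls <- paste0(base_url, 1:10)
page_urls

# Uncomment to scrape for real (needs rvest, a network connection, and a valid URL).
# simplify = FALSE keeps one list element per page; unlist() flattens to one vector.
# reviews <- unlist(sapply(1:10, scrape_page, simplify = FALSE))
```

The scraping call is left commented out so the sketch runs offline. Check a site's terms of use before pointing something like this at it.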

The rest involves extracting and cleaning the information. The scrubber function is one of many canned functions in the qdap library that help clean up excess spacing, stray punctuation, and other debris that gets in the way of qualitative analysis. I organized the text so that there is one entry per review, though to be honest, that may not be necessary depending on how you want the sentiment analysis output to be organized.

The end product is a vector of 100 character strings, each being a customer review that includes the title of the review.
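Here is a small sketch of that cleaning step on made-up text. The titles and bodies below are invented stand-ins for scraped content, and joining them with paste is just one assumed way to get one string per review.

```r
library(qdap)

# Made-up titles and bodies standing in for scraped review text
titles <- c("Great  console", "Hard to find")
bodies <- c("Brings back   memories.\nWorth every penny.",
            "Sold out everywhere ,  which was frustrating.")

# One string per review: title followed by the review body
raw_reviews <- paste(titles, bodies, sep = ". ")

# scrubber() tidies the strings (extra spaces, newlines, and similar clutter)
reviews <- scrubber(raw_reviews)
reviews
```

Comparing raw_reviews with reviews side by side is a quick way to see what scrubber changed before you hand the text to anything else.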

Sentiment Analysis (Polarity)

The canned function in qdap is called polarity because you are attempting to quantify and analyze how positive or negative the text content is.

Once you have an object that contains your text information, go ahead and pass that on to the polarity function. The function itself will provide you with some output.
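As a minimal sketch, with three invented reviews standing in for the scraped vector, the call looks something like this:

```r
library(qdap)

# Invented reviews standing in for the scraped and cleaned text
reviews <- c(
  "This console is great and the games are fun.",
  "The controller cords are short and the price is terrible.",
  "It arrived on time."
)

# Score each review; the result is saved as pol
pol <- polarity(reviews)

# Printing the object shows the summary output
pol
```

Each element of the vector is scored as one block of text, so how you split the text up beforehand determines what each score refers to.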

To get to the most useful output from this function, you need to dig into it a little bit. The output is saved as a list, so you can use the names function to see the list elements it contains. I saved my polarity output as pol, and from there you can start extracting specific information about the polarity scores and which words were found to be positive or negative.

Digging into the list elements allows you to get specifics on the various text blocks you provided. This way you can narrow in on the information provided by a particular customer review if you need to.

You can also create a plot of the sentiment information if you need to present this to other interested parties.
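A short sketch of that digging, again on invented reviews. The column names used here (polarity, pos.words, neg.words) are the ones I know from the per-review table in pol$all; run names(pol$all) on your own output to confirm them for your qdap version.

```r
library(qdap)

# Invented reviews standing in for the scraped text
reviews <- c(
  "This console is great and the games are fun.",
  "The controller cords are short and the price is terrible.",
  "It arrived on time."
)
pol <- polarity(reviews)

# Which list elements are available
names(pol)

# Per-review polarity scores
pol$all$polarity

# Words flagged as positive or negative in each review
pol$all$pos.words
pol$all$neg.words

# Everything qdap recorded for one particular review (here the second)
pol$all[2, ]
```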
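Plotting goes through the plot method that qdap provides for polarity objects. A minimal sketch on invented reviews:

```r
library(qdap)

# Invented reviews standing in for the scraped text
reviews <- c(
  "This console is great and the games are fun.",
  "The controller cords are short and the price is terrible.",
  "It arrived on time."
)
pol <- polarity(reviews)

# Draw the default polarity plot for the scored text
plot(pol)
```

With only a handful of made-up reviews the picture is not very informative; it becomes more useful with the full set of 100.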

All in all, I feel like the polarity function is a pretty easy-to-use and well-rounded function for sentiment analysis. It may be worth mentioning that the grouping.var option is pretty handy, too. If you have text information on a number of products, you can use the grouping variable option and then dig into the per-group information using pol$group. New lines are added to the graphic as well, and it does a good job of summarizing and subsetting the information. If you are trying to compare product performance, webpage formats, or whatever it may be, I highly suggest you employ the grouping.var option.
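To make the grouping idea concrete, here is a sketch with a made-up data frame. The product names, the reviews, and the object names feedback and pol_by_product are all invented for illustration.

```r
library(qdap)

# Invented feedback for two hypothetical products
feedback <- data.frame(
  product = c("Console A", "Console A", "Console B", "Console B"),
  review = c(
    "The picture is great and setup was easy.",
    "I love the included games.",
    "The controller broke and support was awful.",
    "It is slow and the menus are confusing."
  ),
  stringsAsFactors = FALSE
)

# Score the reviews, summarizing by product
pol_by_product <- with(feedback, polarity(review, grouping.var = product))

# One summary row per product
pol_by_product$group
```

The per-review detail is still available in pol_by_product$all, so grouping adds a summary layer without throwing anything away.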

A word of caution. Like most sentiment analyses, polarity may struggle with and misclassify sarcasm or other non-literal forms of speech. One way to get around this is to provide the polarity function with your own set of sentiment-loaded words using the polarity.frame option. Experiment with it and you can get it closer to what you want for your specific situation. If you have all the resources in the world you could employ deep learning algorithms to pick that sort of thing off, but that kind of seems like trying to deliver a letter a block away using a ballistic missile. A little overkill, and it may not go the way you anticipate.
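One way to build a custom polarity.frame is with qdap's sentiment_frame function, starting from the default word lists and adding your own terms. The sketch below is only an illustration: the added words "retro" and "scalper" are my own guesses at domain terms that matter for this product, not part of any standard lexicon.

```r
library(qdap)

# Start from the default word lists and add domain-specific terms.
# "retro" and "scalper" are illustrative choices, not standard entries.
positives <- union(qdapDictionaries::positive.words, "retro")
negatives <- union(qdapDictionaries::negative.words, "scalper")

# Build a lookup of words and weights that polarity() can use
custom_frame <- sentiment_frame(positives, negatives)

# Invented reviews that lean on the added terms
reviews <- c(
  "It has a retro look.",
  "I bought mine from a scalper."
)

# Compare scores under the default and the custom word lists
pol_default <- polarity(reviews)
pol_custom <- polarity(reviews, polarity.frame = custom_frame)

pol_default$all$polarity
pol_custom$all$polarity
```

Comparing the two score vectors shows whether your additions moved anything. sentiment_frame also accepts pos.weights and neg.weights if you want some words to count for more than others.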

Anyways, that will do it for this post. I hope you found this helpful and, as always, I'm open to constructive criticism or additional thoughts so please submit that in the comments. Feel free to ask me any specific questions at this site. Be aware that there is a nominal $1.50 fee to submit questions. That is because it takes time and effort to respond to your questions.
